Secure and Responsible AI: The Generative AI Security Scoping Matrix

“Sunny days wouldn't be special if it wasn't for rain. Joy wouldn't feel so good if it wasn't for pain.” - 50 Cent

Almost a year ago, the Generative AI Security Scoping Matrix was published. As we’ve positioned our GenAI capabilities and services in the market through 2024, consumption patterns have emerged that shape design considerations. Those patterns have also helped us communicate the security model for each scope, easing customer concerns and enabling innovation.

As organizations integrate generative AI models, securing them and deploying them responsibly becomes paramount. This article explores strategies for designing and deploying accountable and secure AI solutions, using a structured approach that incorporates governance, compliance, legal, privacy, risk management, controls, and resilience.

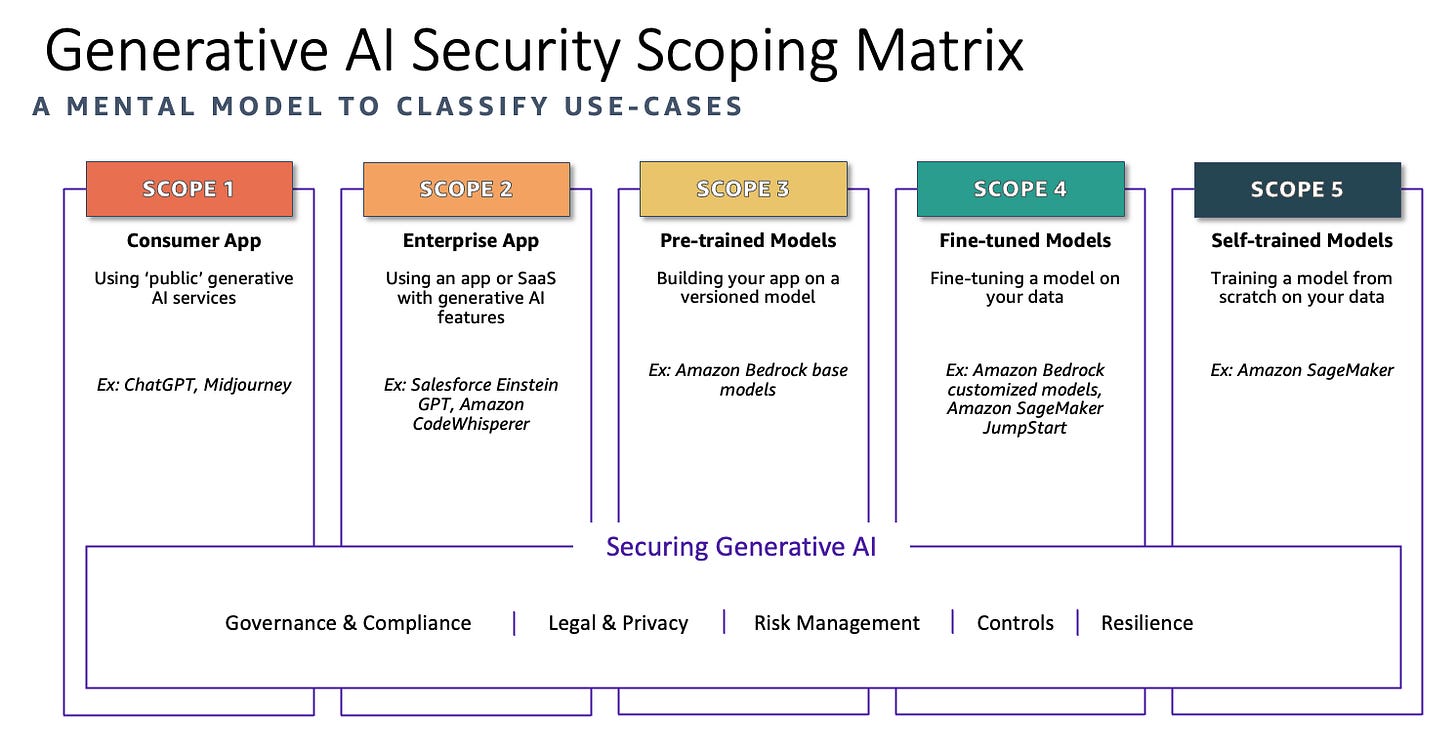

Understanding the Generative AI Security Scoping Matrix

The Generative AI Security Scoping Matrix serves as a mental model to classify use cases and understand the security implications associated with different generative AI applications. The matrix categorizes AI applications into five scopes based on the level of ownership and control:

Consumer App (Scope 1)

Scope 1 encompasses the use of public generative AI services, such as chat applications and other readily available AI tools provided by third parties. These applications are often accessible via web interfaces or APIs, allowing users to interact with generative AI models without the need for deep technical expertise or extensive infrastructure investments. While these services offer significant convenience and accessibility, they also come with specific security and compliance considerations. Organizations leveraging public generative AI services must carefully evaluate the terms of service, data handling practices, and security measures employed by the service provider. Ensuring that sensitive or proprietary data is not inadvertently shared or exposed through these public platforms is paramount.

From a security perspective, the primary responsibility of the organization using Scope 1 applications is to manage and control the data inputs and outputs. This includes implementing policies that restrict the use of personally identifiable information (PII) and other sensitive data in interactions with the AI service. Additionally, organizations should monitor and audit the use of these services to detect and respond to any anomalous activities or potential security incidents. By adhering to best practices for data governance and maintaining a clear understanding of the public service's security posture, organizations can mitigate risks and harness the benefits of generative AI technologies responsibly.
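One way to enforce an input policy like the one described above is to scrub prompts before they leave the organization. The following is a minimal sketch, not a complete control: the pattern set and the `redact_pii` helper are hypothetical names chosen for illustration, and regular expressions alone will miss many forms of PII.

```python
import re
from typing import List, Tuple

# Hypothetical patterns for illustration; real deployments need broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(prompt: str) -> Tuple[str, List[str]]:
    """Replace matches with placeholders and report which categories were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found
```

A gateway or browser extension could call `redact_pii` on each prompt and use the returned category list to feed the monitoring and audit process, for example by alerting when a user repeatedly submits flagged content.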

Tooling examples in Scope 1:

ChatGPT: A conversational AI model developed by OpenAI, used for generating human-like text based on user input.

MidJourney: An AI-powered image generation tool that creates artwork based on textual descriptions.

Google Bard (since rebranded as Gemini): A generative AI service provided by Google, capable of creating text, answering queries, and generating content.

Enterprise App (Scope 2)

Scope 2 involves the use of enterprise applications that have generative AI features embedded within them. These applications are typically provided by third-party vendors and integrated into an organization's existing infrastructure to enhance business processes, productivity, and decision-making. Examples include advanced customer relationship management (CRM) systems, enterprise resource planning (ERP) software, and other business applications that utilize generative AI to automate tasks, provide insights, and improve user experiences. By incorporating generative AI capabilities, these applications can, for instance, generate automated meeting agendas, draft emails, offer intelligent recommendations, and streamline workflow processes.

When using enterprise applications with embedded generative AI features, organizations must address several critical security and compliance considerations. First, it's essential to thoroughly vet the third-party vendor's security practices, including their data handling and storage procedures, to ensure they meet the organization's security and compliance requirements. Organizations should work closely with their legal and procurement departments to understand and negotiate the terms of service, licensing agreements, and data usage policies. Additionally, they should implement robust data governance frameworks to control how data is accessed, shared, and processed within these applications. This may involve setting strict access controls, monitoring usage patterns, and ensuring that only authorized personnel can interact with the AI features. By taking these precautions, organizations can leverage the power of generative AI within enterprise applications while maintaining a strong security posture and adhering to regulatory requirements.

Tooling examples in Scope 2:

Salesforce Einstein GPT: Integrates generative AI capabilities into Salesforce's CRM platform to automate and enhance customer interactions and sales processes.

Microsoft Dynamics 365 Copilot: Embeds generative AI features into Microsoft’s ERP and CRM solutions to assist with tasks like drafting emails, generating reports, and providing insights.

Oracle Digital Assistant: Incorporates AI-powered conversational capabilities into Oracle’s suite of enterprise applications to improve customer service and streamline business operations.

Pre-trained Models (Scope 3)

Scope 3 involves building applications using pre-trained generative AI models provided by third-party vendors. These models have been trained on vast datasets and are ready to be integrated into various applications via APIs or other interfaces. By leveraging pre-trained models, organizations can significantly reduce the time and resources required to develop AI capabilities from scratch. These models are typically versioned, ensuring that updates and improvements can be systematically tracked and applied. Examples of such pre-trained models include large language models for natural language processing tasks, image generation models, and other specialized AI tools designed for specific functions.

Using pre-trained models offers several advantages, including access to cutting-edge AI technology and the ability to quickly implement sophisticated AI features within applications. However, it also introduces unique security and compliance challenges. Organizations must carefully assess the data used to train these models to ensure it aligns with their privacy and security standards. Additionally, they should implement robust access controls to manage how the models are utilized and to prevent unauthorized access or misuse. Monitoring and logging interactions with the AI models can help detect and respond to potential security incidents. By adopting a comprehensive approach to security and governance, organizations can effectively leverage pre-trained models to enhance their applications while maintaining compliance with relevant regulations and safeguarding sensitive data.
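To make the monitoring and logging point concrete, here is a small sketch of an audit wrapper around a model call. It assumes the model is reachable through some function that takes a prompt and returns text; `audited`, `model_call`, and `sink` are hypothetical names, and the record stores a hash and sizes rather than the raw prompt so the log itself does not become a store of sensitive data.

```python
import hashlib
import json
import time
from typing import Callable, List


def audited(model_call: Callable[[str], str], user_id: str, sink: List[str]) -> Callable[[str], str]:
    """Wrap a model call so every interaction leaves a JSON audit record in `sink`."""

    def wrapper(prompt: str) -> str:
        started = time.time()
        response = model_call(prompt)
        sink.append(json.dumps({
            "user_id": user_id,
            "timestamp": started,
            # Hash instead of raw text: supports correlation without storing content.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "prompt_chars": len(prompt),
            "response_chars": len(response),
        }))
        return response

    return wrapper
```

In practice the `sink` list would be replaced by the organization's logging pipeline, and whether to retain full prompt text is a policy decision that depends on data classification.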

Tooling examples in Scope 3:

OpenAI GPT-4 API: Provides access to the powerful GPT-4 model, allowing developers to integrate advanced language understanding and generation into their applications.

IBM Watson: Offers a range of pre-trained models for various AI tasks, including natural language processing, image recognition, and more.

Hugging Face Transformers: A library that provides access to numerous pre-trained models for NLP, including BERT, GPT-2, and others, which can be easily integrated into custom applications.

Fine-tuned Models (Scope 4)

Scope 4 involves the fine-tuning of pre-trained generative AI models using specific data from an organization. This process tailors the model to better suit the unique needs and nuances of the organization’s operations, products, or customer interactions. Fine-tuning can significantly enhance the performance of generative AI applications by incorporating domain-specific knowledge and improving the model’s accuracy and relevance. For instance, a customer support chatbot can be fine-tuned with historical customer service interactions, enabling it to provide more accurate and contextually appropriate responses. Similarly, a marketing content generator can be refined with the organization’s branding guidelines and past campaign data to produce on-brand materials.

While fine-tuning offers substantial benefits, it also presents distinct security and compliance challenges. Organizations must ensure that the data used for fine-tuning is appropriately classified and protected, as this data often includes sensitive information such as proprietary business data or personal customer information. Implementing strong data governance policies is crucial to manage access to this data and to ensure it is used ethically and securely. Moreover, it is essential to maintain transparency and traceability in the fine-tuning process to comply with regulatory requirements and to facilitate audits. Organizations should also consider the implications of model outputs and implement mechanisms to monitor and validate the generated content, ensuring it aligns with ethical standards and does not inadvertently disclose sensitive information. By addressing these considerations, organizations can leverage fine-tuned models to achieve more effective and customized AI solutions while maintaining robust security and compliance practices.
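The classification requirement above can be sketched as a gate in front of the fine-tuning dataset. This is an illustrative example only: the four-level scheme, the `Record` type, and `select_for_fine_tuning` are assumptions, and the gate relies on records having been labeled correctly upstream.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical classification scheme, ordered from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]


@dataclass
class Record:
    text: str
    classification: str
    source: str


def select_for_fine_tuning(records: List[Record], max_level: str = "internal") -> Tuple[List[Record], List[Record]]:
    """Split records into (accepted, rejected) by classification ceiling.

    Unknown or missing labels are rejected, so the gate fails closed.
    """
    ceiling = LEVELS.index(max_level)
    accepted, rejected = [], []
    for record in records:
        if record.classification in LEVELS and LEVELS.index(record.classification) <= ceiling:
            accepted.append(record)
        else:
            rejected.append(record)
    return accepted, rejected
```

Keeping the rejected list, rather than silently dropping records, gives auditors a trace of what was excluded from training and why.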

Tooling examples in Scope 4:

Amazon SageMaker: Allows organizations to fine-tune pre-trained models with their own data to create customized AI solutions tailored to specific business needs.

Azure AI Studio: Provides tools for fine-tuning pre-trained models, enabling businesses to adapt models to their datasets and requirements.

Google Vertex AI: Facilitates fine-tuning pre-trained models with enterprise data to develop specialized AI applications.

Self-trained Models (Scope 5)

Scope 5 represents the most advanced and involved approach to deploying generative AI, where organizations train models entirely from scratch using their own data. This method provides the highest level of customization, allowing the AI model to be deeply integrated with the organization's specific requirements, industry knowledge, and proprietary data. Training models from scratch can be particularly advantageous for companies operating in highly specialized fields where pre-trained models do not adequately capture the complexities or subtleties of the domain. For example, an organization in the healthcare sector might develop a generative AI model trained on a vast corpus of medical literature and patient data to assist in diagnostics and personalized treatment plans.

However, developing self-trained models also introduces significant security, ethical, and logistical challenges. The process demands extensive computational resources and access to high-quality, comprehensive datasets. Organizations must implement rigorous data governance frameworks to ensure that the data used for training is accurate, representative, and compliant with privacy regulations. This includes anonymizing sensitive data and obtaining necessary consent. Additionally, developing these models necessitates robust security measures to protect the training data from breaches and unauthorized access. Continuous monitoring and validation of the model's outputs are crucial to identify and mitigate biases, ensure ethical use, and maintain high performance standards. By addressing these challenges with a comprehensive strategy, organizations can harness the full potential of self-trained models to drive innovation and achieve strategic objectives.
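As one illustration of preparing sensitive training data, the sketch below replaces direct identifiers with keyed hashes. Note the assumption: this is pseudonymization, which is weaker than full anonymization, because anyone holding the key can recompute the mapping and the remaining fields may still allow re-identification. The helper names `pseudonymize` and `scrub_record` are hypothetical.

```python
import hashlib
import hmac
from typing import Dict, Set


def pseudonymize(value: str, key: bytes) -> str:
    """Return a stable keyed token for `value` (HMAC-SHA256, truncated for readability)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def scrub_record(record: Dict[str, object], identifier_fields: Set[str], key: bytes) -> Dict[str, object]:
    """Replace identifier fields with tokens; leave all other fields untouched."""
    return {
        name: pseudonymize(str(value), key) if name in identifier_fields else value
        for name, value in record.items()
    }
```

Because the same input and key always produce the same token, records belonging to one individual can still be linked for training purposes, while the key itself should be stored and rotated under the same controls as other secrets.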

Key Security Disciplines for Generative AI

The following security disciplines are essential for securing generative AI applications:

Governance and Compliance

Establish policies and procedures to manage risks and ensure compliance with regulatory requirements.

Define data governance strategies to control the use of data in generative AI applications, especially in Scopes 1 and 2, where third-party services are involved.

Legal and Privacy

Address legal and privacy concerns, including data sovereignty, end-user license agreements, and GDPR compliance.

Implement policies to prevent the use of sensitive data, such as Personally Identifiable Information (PII), in generative AI applications.

Risk Management

Conduct risk assessments and threat modeling to identify and mitigate potential threats.

Be aware of unique threats like prompt injection attacks that exploit the free-form nature of large language models (LLMs).
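To illustrate the prompt injection threat, here is a deliberately simple sketch of two common mitigations: keeping untrusted input in its own message rather than concatenating it into the instructions, and screening for known attack phrasing. The phrase list and function names are hypothetical, and pattern matching of this kind is easy to evade, so it should be treated as one signal among several rather than a defense on its own.

```python
import re
from typing import Dict, List

# Hypothetical phrase list for illustration; attackers can easily rephrase.
SUSPICIOUS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system )?prompt", re.I),
    re.compile(r"you are now", re.I),
]


def screen_input(user_text: str) -> List[str]:
    """Return the patterns that matched, so the caller can log, block, or escalate."""
    return [p.pattern for p in SUSPICIOUS if p.search(user_text)]


def build_messages(system_prompt: str, user_text: str) -> List[Dict[str, str]]:
    """Keep untrusted input in a separate message; never splice it into instructions."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]
```

The role-separated message format is an assumption modeled on common chat-style APIs; the important design choice is that user text is never treated as part of the instruction channel.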

Controls

Implement identity and access management (IAM) controls to enforce least privilege access.

Utilize application layers and identity solutions to authenticate and authorize users based on roles and attributes.
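The role- and attribute-based authorization described above might look like the following in an application layer that sits in front of a model. This is a sketch under assumptions: the role names, permission strings, and `is_allowed` helper are hypothetical, and a production system would normally delegate these decisions to its identity provider or policy engine.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"model:invoke"},
    "ml_engineer": {"model:invoke", "model:fine_tune"},
}


@dataclass
class User:
    name: str
    roles: Set[str]
    attributes: Dict[str, str] = field(default_factory=dict)


def is_allowed(user: User, action: str, resource_attrs: Dict[str, str]) -> bool:
    """Allow only if some role grants the action AND every resource attribute matches."""
    role_ok = any(action in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
    attr_ok = all(user.attributes.get(k) == v for k, v in resource_attrs.items())
    return role_ok and attr_ok
```

The default-deny behavior for unknown roles is what enforces least privilege here: a user gains access only through an explicit grant.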

Resilience

Design for high availability and disaster recovery to ensure business continuity.

Use resilient design patterns such as backoff and retries, circuit breaker patterns, and multi-region deployment strategies.
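Two of the patterns named above, retries with backoff and the circuit breaker, can be sketched in a few lines. The class and function names, thresholds, and delays below are illustrative assumptions rather than recommended values; the `clock` and `sleep` parameters exist only so the behavior can be tested without waiting.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # success closes the circuit
        return result


def retry_with_backoff(fn, attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Retry `fn` with exponential backoff and full jitter; re-raise the last error."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # do not hammer a dependency the breaker has marked as down
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

The two compose naturally: wrapping a model endpoint call as `retry_with_backoff(lambda: breaker.call(invoke_model, prompt))` retries transient failures but stops immediately once the breaker opens, where `invoke_model` stands in for whatever client function the application uses.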

Applying Security Practices to Generative AI Workloads

Security practices for generative AI should build on existing cloud security principles while addressing the unique aspects of AI workloads. Core disciplines like IAM, data protection, application security, and threat modeling remain critical. However, additional considerations must be made for the dynamic and evolving nature of generative AI models.

For example, in Scopes 3, 4, and 5, where organizations build on, fine-tune, or train models, it is crucial to understand the data classification and the potential risks of training sensitive data into model weights, where it can no longer be access-controlled or easily removed. Techniques like Retrieval-Augmented Generation (RAG) can help mitigate these risks by retrieving data just in time, at query time, rather than embedding it directly in the model.
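A toy sketch can show why just-in-time retrieval helps: because documents are fetched per request, the caller's permissions can be checked before anything reaches the prompt. Everything here is a simplifying assumption, including the `Document` type, the group-based access model, and the keyword-overlap scoring, which stands in for the vector search a real RAG system would typically use.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass
class Document:
    text: str
    allowed_groups: FrozenSet[str]


def retrieve(query: str, docs: List[Document], user_groups: Set[str], k: int = 2) -> List[Document]:
    """Return up to k documents the user may see, ranked by naive keyword overlap."""
    terms = set(query.lower().split())
    # Authorization happens before ranking, so restricted text never reaches the prompt.
    visible = [d for d in docs if d.allowed_groups & user_groups]
    scored = [(len(terms & set(d.text.lower().split())), d) for d in visible]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(question: str, context_docs: List[Document]) -> str:
    """Assemble a prompt from retrieved context only."""
    context = "\n".join(f"- {d.text}" for d in context_docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"
```

Revoking a user's access or deleting a document takes effect on the next query, which is difficult to achieve once the same data has been used to train or fine-tune a model.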

Conclusion

Designing and deploying secure and responsible AI requires a comprehensive approach that incorporates governance, compliance, legal, privacy, risk management, controls, and resilience. By leveraging the Generative AI Security Scoping Matrix and established cloud security practices, organizations can effectively manage the security and ethical implications of their generative AI applications.

The journey to secure generative AI is ongoing. Organizations must stay informed about emerging threats, continuously update their security strategies, and collaborate across teams to ensure the safe and responsible use of AI technologies.